Mathletics Part III: Basketball

Like any other self-respecting basketball geek, I started Wayne Winston’s new book Mathletics by reading Part III: Basketball.

Before diving into my thoughts on this part of the book, it’s important to spell out the book’s intent. Although it has math in the title, this book isn’t filled with a bunch of scary math.

Instead, this book is intended to introduce the reader to various models used to analyze sports, some of which are actually used in practice by team management to aid in decision making. This means you’re not going to see much discussion of the theoretical underpinnings of the models. You’ll instead see their practical implementation.

So even though there is math in this book, the math is built from the ground up as the book progresses. If you have some prior experience with elementary statistics and regression, you should have no problem skipping ahead to the basketball part of the book.

Here are the chapters of the basketball part of Mathletics:

- Basketball Statistics 101

- Linear Weights for Evaluating NBA Players

- Adjusted +/- Player Ratings

- NBA Lineup Analysis

- Analyzing Team and Individual Matchups

- NBA Players’ Salaries and the Draft

- Are NBA Officials Prejudiced?

- Are College Basketball Games Fixed?

- Did Tim Donaghy Fix NBA Games?

- End-Game Basketball Strategy

I don’t want to give away all of Wayne’s hard work, but I do want to give you a sense of what you’ll find in these chapters of the book.

The first chapter, Basketball Statistics 101, introduces the reader to Dean Oliver’s four factors of basketball success. Wayne also uses a regression to show how these factors relate to wins, in a similar fashion to this regression by Ed Küpfer, except that Ed looked at win percentage instead of total season wins. The only issue I have with Wayne’s regression is that it seems like there should be a way to transform the data to make the coefficients easier to interpret. This is mere speculation on my part, though. You can still interpret them without too much effort, and he shows you how to do so with a minimal amount of math.
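As a concrete reference, here is a minimal Python sketch of the four factors computed from a team’s box-score totals. The `TeamBox` type and its field names are hypothetical. The 0.44 weight is the commonly used estimate of how many free-throw attempts end a possession, and the free-throw factor here is FT/FGA (some versions use FTA/FGA instead). These are standard choices, not necessarily the exact definitions used in the book.

```python
from dataclasses import dataclass


@dataclass
class TeamBox:
    """Team box-score totals (hypothetical field names)."""
    fgm: int      # field goals made
    fga: int      # field goals attempted
    fg3m: int     # three-pointers made
    ftm: int      # free throws made
    fta: int      # free throws attempted
    tov: int      # turnovers
    orb: int      # offensive rebounds
    opp_drb: int  # opponent defensive rebounds


def four_factors(b: TeamBox) -> dict:
    """Return the four factors as fractions (not percentages)."""
    return {
        # Effective FG%: a made three counts 1.5 times a made two.
        "efg": (b.fgm + 0.5 * b.fg3m) / b.fga,
        # Turnovers per possession, estimating possessions as FGA + 0.44*FTA + TOV.
        "tov": b.tov / (b.fga + 0.44 * b.fta + b.tov),
        # Share of available offensive rebounds the team grabbed.
        "orb": b.orb / (b.orb + b.opp_drb),
        # Free throws made per field goal attempt.
        "ft_rate": b.ftm / b.fga,
    }


# Made-up single-game totals, for illustration only.
game = TeamBox(fgm=40, fga=85, fg3m=10, ftm=18, fta=24, tov=14, orb=11, opp_drb=30)
print(four_factors(game))
```

Computing the same four numbers for a team’s opponents gives the defensive side of the ledger.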

If you haven’t already read the paper A Starting Point for Analyzing Basketball Statistics, the end of this chapter is a good time to do so.

The next chapters, Linear Weights for Evaluating NBA Players and Adjusted +/- Player Ratings, cover the evaluation of individual players. The reader is first introduced to the linear-weight ratings: NBA Efficiency Rating, Player Efficiency Rating (PER), and Win Scores/Wins Produced. Because these ratings are based on the box score, we know they do not capture the entire game, and I like how Wayne details their inadequacies by showing how these metrics reward bad players for doing things they shouldn’t do.
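One well-known way to see this kind of problem (not necessarily the example Wayne uses) is how NBA Efficiency treats missed shots. The sketch below uses the standard formula, (PTS + REB + AST + STL + BLK) - missed FG - missed FT - TOV. The `BoxLine` type and both stat lines are made up for illustration. Because a made two adds 2 and a miss subtracts only 1, each extra two-point attempt raises the rating whenever the shooter makes more than one in three.

```python
from dataclasses import dataclass


@dataclass
class BoxLine:
    """One player's box-score line (hypothetical example values below)."""
    pts: int
    reb: int
    ast: int
    stl: int
    blk: int
    fgm: int
    fga: int
    ftm: int
    fta: int
    tov: int


def nba_efficiency(b: BoxLine) -> int:
    """NBA Efficiency: positive box-score events minus misses and turnovers."""
    positives = b.pts + b.reb + b.ast + b.stl + b.blk
    return positives - (b.fga - b.fgm) - (b.fta - b.ftm) - b.tov


def eff_gain_per_two_attempt(fg_pct: float) -> float:
    """Expected change in NBA Efficiency from one extra two-point attempt."""
    return 2 * fg_pct - 1 * (1 - fg_pct)  # +2 on a make, -1 on a miss


base = BoxLine(pts=10, reb=5, ast=3, stl=1, blk=0, fgm=4, fga=8, ftm=2, fta=3, tov=2)
# Same player plus 10 extra two-point attempts at 40% (4 makes, 6 misses).
chucker = BoxLine(pts=18, reb=5, ast=3, stl=1, blk=0, fgm=8, fga=18, ftm=2, fta=3, tov=2)

print(nba_efficiency(base), nba_efficiency(chucker))  # 12 14
```

Shooting 40% on twos is poor, yet the extra attempts raise this player’s rating from 12 to 14.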

After this tour of box-score measures, the Adjusted +/- Player Ratings chapter covers a way of estimating player value that attempts to weight a player’s offensive and defensive abilities equally by using play-by-play data instead of the box score. I believe Dan Rosenbaum’s adjusted +/- article was the first public attempt to re-create what Wayne outlines in this chapter.

One thing that has always confused me about the various published adjusted +/- ratings is the use of minutes versus possessions as a measure of time in the model formulation. I’ll let you read the book to see exactly what Wayne does, but a lingering question I have is: “Why would I want to use minutes instead of possessions?” Speaking of exactly what Wayne does, at the end of the chapter he shows you how to use Excel Solver to find adjusted +/- ratings (you may also be interested in how Eli Witus fit a similar model with Excel here and here, and you can find a wealth of ratings at Basketball Value).
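To make the minutes-versus-possessions question concrete, here is a small sketch with two made-up stints. Each ends +4 over 6 minutes, but one is played at a faster pace. Per 48 minutes the stints look identical; per 100 possessions the slower stint looks better, because the same margin came on fewer chances. The function names are hypothetical.

```python
def per_48_minutes(margin: float, minutes: float) -> float:
    """Scoring margin scaled to a 48-minute game."""
    return 48.0 * margin / minutes


def per_100_possessions(margin: float, possessions: float) -> float:
    """Scoring margin scaled to 100 possessions."""
    return 100.0 * margin / possessions


# Hypothetical stints: (margin, minutes, possessions per team)
slow_stint = (4, 6.0, 10)
fast_stint = (4, 6.0, 14)

for margin, minutes, poss in (slow_stint, fast_stint):
    print(per_48_minutes(margin, minutes), round(per_100_possessions(margin, poss), 1))
```

Both stints come out at 32.0 per 48 minutes, but at 40.0 versus about 28.6 per 100 possessions. In an adjusted +/- regression, the same choice shows up in how each stint’s margin is scaled and weighted.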

The next two chapters, NBA Lineup Analysis and Analyzing Team and Individual Matchups, cover topics I’m very interested in. Adjusted +/- ratings are useful, but ultimately I want to know how players perform together and how they perform against other player combinations. These chapters give some insight into how Wayne does this for the Mavs (speaking of which, my sense is that these two chapters cover only 9 pages combined because of his obligations to the team).

These chapters give us a general idea of how these ratings are calculated, but we don’t get a handy Excel tutorial to guide us through the process. One thing I don’t like about the lineup analysis chapter is the use of the standard error of the mean (at least as it’s used in the book) as a crutch for deciding whether one lineup is better than another. I think it’s more complicated than that. I don’t have a rigorous rebuttal, so I’ll simply say that it feels dirty to me. I usually like dirty; I just don’t like it in the world of math. This is certainly something worth studying, and I hope it turns out to be a case of me making things more complicated than they need to be, which I often tend to do.
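For readers who haven’t seen it, here is roughly what a standard-error comparison of two lineups looks like. This is a generic sketch, not a reproduction of the book’s calculation. It treats each possession’s points as an independent draw, computes each lineup’s mean and standard error, and forms a z-score for the difference. The function names and point totals are invented.

```python
import math
import statistics


def mean_and_se(points):
    """Mean points per possession and the standard error of that mean."""
    mean = statistics.mean(points)
    se = statistics.stdev(points) / math.sqrt(len(points))
    return mean, se


def z_for_difference(lineup_a, lineup_b):
    """z-score for mean(a) - mean(b), assuming independent possessions."""
    mean_a, se_a = mean_and_se(lineup_a)
    mean_b, se_b = mean_and_se(lineup_b)
    return (mean_a - mean_b) / math.sqrt(se_a ** 2 + se_b ** 2)


# Hypothetical points scored on each possession by two lineups.
lineup_a = [2, 2, 0, 2]
lineup_b = [0, 2, 0, 2]
print(round(z_for_difference(lineup_a, lineup_b), 3))
```

A common reading is that a |z| above about 2 marks one lineup as "better"; with only a handful of possessions per lineup, as here, the difference is nowhere near that.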

I like the examples in the matchups chapter, and they make me want to study the finer points of this type of analysis. Wayne gives an interesting example from the 2006 NBA playoffs to illustrate the point, and I would like to know how well it holds up on a larger scale. He can’t give away the toolbox, but he has at least given motivation for things to look at.

The final chapters in this part of the book cover topics outside the realm of quantifying team strength and player value. The NBA Players’ Salaries and the Draft chapter explores a model for determining whether the NBA draft is efficient. I’ve never studied this kind of thing, so the results were interesting to see.

In the next chapters, Are NBA Officials Prejudiced? and Did Tim Donaghy Fix NBA Games?, Wayne shows how we might analyze these topics and what conclusions we can draw from the analysis.

The final chapter of the basketball part of Mathletics is End-Game Basketball Strategy, which asks which shot to take when down by 2 and whether to foul when up by 3. This topic intrigues me, especially after reading the paper Optimal End-Game Strategy in Basketball, where I felt the restrictions on the model were perhaps too simplistic (which, again, may be a case of me making things more complicated than they need to be). I’m not alone, though: Wayne notes that he is working on a simulation model to help solve this problem, which makes a lot of sense. The good news is that Wayne presents some general results and performs a sensitivity analysis on the model, which gives you an idea of what conclusions you would draw for various inputs to the model.
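A deliberately oversimplified version of the down-by-2 question fits in a few lines, and it shows what a sensitivity analysis varies. This sketch is not Wayne’s model: it assumes the last shot is taken with no time left, ignores offensive rebounds, fouls, and and-ones, and treats the probability of winning in overtime as an input. The function name and all probabilities are illustrative assumptions.

```python
def down_two_win_probs(p_two, p_three, p_win_ot=0.5):
    """Return (P(win) if shooting a 2, P(win) if shooting a 3) on the last shot.

    A made 2 only forces overtime; a made 3 wins outright.
    """
    return p_two * p_win_ot, p_three


# Sensitivity of the decision to the chance of winning in overtime.
p_two, p_three = 0.50, 0.33
for p_win_ot in (0.4, 0.5, 0.6, 0.7):
    win_2, win_3 = down_two_win_probs(p_two, p_three, p_win_ot)
    choice = "3" if win_3 > win_2 else "2"
    print(f"P(win OT)={p_win_ot:.1f}: go for 2 {win_2:.3f}, go for 3 {win_3:.3f}, shoot a {choice}")
```

Under these assumptions, the 3 is the better shot whenever p_three > p_two × p_win_ot, so the answer flips only when the trailing team is a strong favorite in overtime.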

Summary

I have a hunger for information, so of course Mathletics was simply a large dinner that will only hold me over for so long.

That said, I think that the book does a great job of accomplishing its goal: introducing the reader to real world models used in analyzing sports. This of course assumes Wayne has done as good a job with the other parts of the book as he’s done with the basketball part, but I don’t think that’s too much of a stretch.

To read more of Wayne’s work related to the book, see his blog at WayneWinston.com, and you can follow Wayne on Twitter @winston3453.

A Basic Hierarchical Model of Efficiency

In my last post on retrodicting team efficiency, I set a general baseline that can be used to help determine whether a new model of team efficiency makes better predictions than a naive model. This is important, as we want to know whether added model complexity is worth the hassle.

This post will present a basic hierarchical model of efficiency, and we’ll determine how much better this model is at year-to-year predictions.

The Model

Like the classical adjusted plus/minus efficiency model, this basic hierarchical model of efficiency is constructed to predict the mean number of points scored on a given possession between an offensive and a defensive lineup. With this model, though, we treat all players’ ratings as draws from normal distributions, with one distribution for offensive ratings and another for defensive ratings.

Mathematically, we can write this model as follows:

[latex]Y_{i} \sim {\tt N}(\beta_{0} + \beta_{1} + O_{1} + \cdots + O_{5} + D_{1} + \cdots + D_{5}, \sigma^{2}_{y}) \\ O_{i} \sim {\tt N}(0, \sigma^{2}_{o}) \\ D_{i} \sim {\tt N}(0, \sigma^{2}_{d})[/latex]

Where [latex]\beta_{0}[/latex] estimates the average number of points scored on a possession on the road, [latex]\beta_{1}[/latex] estimates the home court advantage for when the offense is at home, and [latex]O_{i}[/latex] and [latex]D_{i}[/latex] estimate the offensive and defensive ratings for each player, respectively.

Also, because we fit this model with a Bayesian analysis, all of the parameters and hyperparameters of this model are given non-informative prior distributions.
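The normal priors on the O_i and D_i are what pull player ratings toward zero. One way to see this, as a simplification of the full Bayesian fit described above rather than the method used in this post, is to hold the variances fixed. The posterior mode then solves a ridge regression, (X^T X + Λ)b = X^T y, where Λ puts no penalty on β0 and β1, a penalty of σ²_y/σ²_o on each offensive rating, and σ²_y/σ²_d on each defensive rating. The sketch uses a dense design matrix and a small Gaussian-elimination solver so it has no dependencies; the column layout, the function names, and the toy data are assumptions for illustration.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(map(float, row)) + [float(bi)] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def map_ratings(rows, y, n_off, n_def, sigma_y, sigma_o, sigma_d):
    """Posterior mode of [beta0, beta1, O..., D...] with the variances held fixed.

    Each row is [1, home, offensive indicators..., defensive indicators...].
    beta0 and beta1 get flat priors, so they are not penalized.
    """
    p = 2 + n_off + n_def
    penalty = ([0.0, 0.0]
               + [sigma_y ** 2 / sigma_o ** 2] * n_off
               + [sigma_y ** 2 / sigma_d ** 2] * n_def)
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    for i in range(p):
        xtx[i][i] += penalty[i]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    return solve_linear(xtx, xty)


# Toy data with one "player" per side; columns are [1, home, O_a, O_b, D_x, D_y].
rows = [
    [1, 1, 1, 0, 1, 0], [1, 0, 1, 0, 0, 1], [1, 1, 0, 1, 1, 0],
    [1, 0, 0, 1, 0, 1], [1, 1, 1, 0, 0, 1], [1, 0, 0, 1, 1, 0],
]
points = [1.2, 0.9, 1.0, 1.1, 1.3, 0.8]  # made-up responses
loose = map_ratings(rows, points, 2, 2, sigma_y=1.0, sigma_o=1.0, sigma_d=1.0)
tight = map_ratings(rows, points, 2, 2, sigma_y=1.0, sigma_o=0.3, sigma_d=0.3)
print([round(v, 3) for v in loose[2:]], [round(v, 3) for v in tight[2:]])
```

The tighter prior (smaller σ_o and σ_d) pulls the player ratings closer to zero. That kind of shrinkage is one plausible reason a hierarchical model avoids the extreme team predictions of the classical model.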

The Player Ratings

The tables below list this model’s estimated top 10 offensive, defensive, and overall players from the 06-07, 07-08, and 08-09 seasons.

[table id=3 /]

[table id=4 /]

[table id=5 /]

Given the uncertainty in the ratings, we can’t say that any one of these players is clearly better than another. In some cases, we can’t even say that a given player is better than the average player.

Retrodiction Results

Obligatory top 10 lists aside, the real interest is in the predictions. Using the retrodiction methodology laid out in the post on retrodicting team efficiency, the ratings from the previous year’s model are used to predict the offensive, defensive, and net efficiency ratings of each team in the next season, given the game location and the 10 players on the court.

The following table lists the results:

[table id=2 /]

The differences from the classical model’s mean absolute error (MAE) and root mean squared error (RMSE) are listed under the MAE Diff and RMSE Diff columns. These are calculated by subtracting the classical model’s result from the hierarchical model’s result, so negative numbers mean the hierarchical model’s predictions are doing better than the classical model’s.

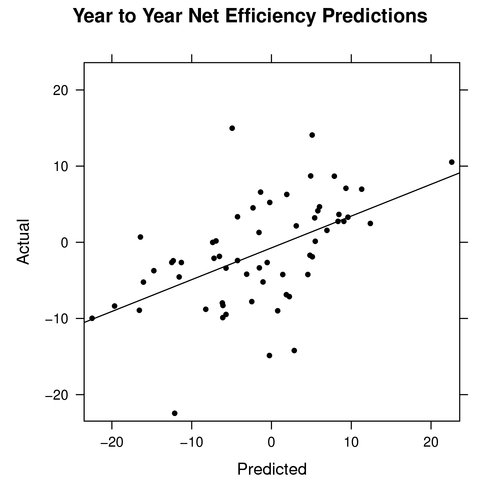

Again, one area of interest is in the net efficiency. The graph below shows the predicted net efficiency versus the actual net efficiency:

Unlike the classical model, this model does not predict teams to have very large or small net efficiency ratings, as most fall within +/- 10 points. This is probably a good thing, since most of the actual ratings fall within this range as well.

Summary

Based on the results above, I consider this basic hierarchical model a better predictor of efficiency than the classical model.

There is, however, still room for improvement, as we again have some weird results where we predict a net efficiency of -5, yet the actual net efficiency is 15.

Randomness will always give us imperfect results, but this result seems like one that is worth investigating in an effort to provide insight into how we can construct a better model of efficiency.

Retrodicting Team Efficiency

Regardless of the many models we may examine, we are ultimately looking to predict team efficiency. To move in this direction, we have to know how well the most basic methods predict team efficiency in order to determine if more complex methods are actually better than a simpler model.

Retrodiction versus Prediction

To best assess differences in model predictions, we will use a process called retrodiction. What this means is that we will actually use some future information when making our predictions.

We do this because we want to compare models that predict efficiency given relevant information, such as who is on the court and where the game is played. We are confident that players will be injured in the upcoming season, but trying to predict injuries just muddies the water when analyzing how well models predict the offensive and defensive efficiency of one lineup versus another.

This sort of analysis is the basis behind Steve’s retrodiction challenge. I want to take advantage of more future information than just minutes played, so I doubt I’ll have much to contribute to this challenge. I’m certainly interested to see how the various models perform against each other, though.

Measuring Prediction Error

In addition to estimating the mean and standard deviation of the error distribution, we will further measure prediction error in two ways:

Mean Absolute Error = [latex]\displaystyle\frac{1}{n} \sum_{i=1}^{n} |\mathrm{predicted}_{i} - \mathrm{actual}_{i}|[/latex]

Root Mean Squared Error = [latex]\displaystyle\sqrt{\frac{1}{n} \sum_{i=1}^{n} (\mathrm{predicted}_{i} - \mathrm{actual}_{i})^{2}}[/latex]

The mean absolute error (MAE) will give us a handle on the average prediction error, and the root mean squared error (RMSE) will penalize us more for predictions that are further from the actual values.

The idea is that if two methods have the same mean absolute error, we would tend to prefer the method that has the lower root mean squared error. Regardless, our goal is to minimize these errors.

These error statistics will be calculated for the offensive, defensive, and net efficiency predictions we make for each team.
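A minimal Python version of the two error measures, with made-up numbers chosen to show the tie-breaking idea: both sets of predictions miss by 2 points on average, but the second concentrates its misses in two large errors, so its RMSE is higher. The function names are hypothetical.

```python
import math


def mean_absolute_error(predicted, actual):
    """Average size of the prediction errors."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def root_mean_squared_error(predicted, actual):
    """Like MAE, but squaring first penalizes large misses more heavily."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual))


actual = [0.0, 0.0, 0.0, 0.0]        # e.g., actual net efficiencies
method_a = [2.0, -2.0, 2.0, -2.0]    # consistent small misses
method_b = [0.0, 0.0, 4.0, -4.0]     # two exact hits, two big misses

for name, pred in (("A", method_a), ("B", method_b)):
    print(name, mean_absolute_error(pred, actual), round(root_mean_squared_error(pred, actual), 3))
```

Both methods have an MAE of 2.0, but A’s RMSE is 2.0 while B’s is about 2.83, so A would be preferred.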

The Model

The model I’ve chosen to use is one of the many formulations of Dan Rosenbaum’s adjusted plus/minus model, and can be written as follows:

[latex] y_{i} \sim {\tt N}(\alpha + \beta_{O1} + \cdots + \beta_{O5} + \beta_{D1} + \cdots + \beta_{D5}, \sigma_{y}^{2})[/latex]

Where [latex]y_{i}[/latex] is the number of points scored on possession [latex]i[/latex] between the offensive and defensive lineups associated with this possession. In this model, I use [latex]\alpha[/latex] to measure home court advantage. It is in the model when the offensive team is at home, and not in the model when the offensive team is on the road. The traditional intercept has been removed from this model.
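In regression terms, each possession becomes one row of indicator variables. The sketch below is a hypothetical helper, not code from this post, that builds that row as a sparse dictionary: one column per offensive player, one per defensive player, and a home-court column that is present only when the offense is at home. There is no intercept column, matching the formulation above. Rows like this could be stacked into a sparse matrix and passed to a sparse least-squares solver (SciPy’s `scipy.sparse.linalg.lsqr` is one option).

```python
def design_row(offense_players, defense_players, offense_home):
    """Map column keys to values for one possession.

    Offensive and defensive ratings are separate columns, so each player
    has two coefficients: ("O", player) and ("D", player).
    """
    row = {("O", p): 1.0 for p in offense_players}
    row.update({("D", p): 1.0 for p in defense_players})
    if offense_home:
        row[("alpha",)] = 1.0  # home-court term; there is no intercept column
    return row


home_five = ["h1", "h2", "h3", "h4", "h5"]  # hypothetical player ids
away_five = ["a1", "a2", "a3", "a4", "a5"]
print(len(design_row(home_five, away_five, offense_home=True)))   # 11
print(len(design_row(away_five, home_five, offense_home=False)))  # 10
```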

I have used the observations from all players when fitting this model. This was done to motivate the exploration of models and methods that take all players into account, not just those who meet some playing-time cutoff.

Prediction Error

Using the fits of these models, a prediction was made for the number of points scored on each possession in the next season. These predictions were only made if the 10 players on the court were in the model fit to the previous year’s data. These predictions were then aggregated for each team to calculate the predicted offensive, defensive, and net efficiency for each team.
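The aggregation step can be sketched as follows. This is an illustrative reconstruction under assumptions, not the post’s actual code: `alpha`, `o_rating`, and `d_rating` stand for last season’s fitted home-court term and player coefficients, possessions with any player missing from those fits are skipped, and efficiencies are reported per 100 possessions. Net efficiency is offensive minus defensive efficiency.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Possession:
    offense_team: str
    defense_team: str
    offense_home: bool
    offense_players: tuple  # five player ids in real data
    defense_players: tuple


def predict_points(pos, alpha, o_rating, d_rating):
    """Predicted mean points, or None if a player was not in last season's fit."""
    if not all(p in o_rating for p in pos.offense_players):
        return None
    if not all(p in d_rating for p in pos.defense_players):
        return None
    home = alpha if pos.offense_home else 0.0
    return (home + sum(o_rating[p] for p in pos.offense_players)
            + sum(d_rating[p] for p in pos.defense_players))


def team_efficiencies(possessions, alpha, o_rating, d_rating):
    """Return {team: (offensive, defensive, net)} efficiency per 100 possessions."""
    scored = defaultdict(lambda: [0.0, 0])   # team -> [predicted points, possessions] on offense
    allowed = defaultdict(lambda: [0.0, 0])  # same, on defense
    for pos in possessions:
        pts = predict_points(pos, alpha, o_rating, d_rating)
        if pts is None:
            continue  # skip possessions with players outside last season's model
        scored[pos.offense_team][0] += pts
        scored[pos.offense_team][1] += 1
        allowed[pos.defense_team][0] += pts
        allowed[pos.defense_team][1] += 1
    result = {}
    for team in scored.keys() & allowed.keys():
        off = 100.0 * scored[team][0] / scored[team][1]
        dfn = 100.0 * allowed[team][0] / allowed[team][1]
        result[team] = (off, dfn, off - dfn)
    return result


# Toy example with one player per side; all ratings are made up.
o_rating = {"a": 1.10, "b": 0.90}
d_rating = {"a": -0.10, "b": 0.05}
possessions = [
    Possession("A", "B", True, ("a",), ("b",)),
    Possession("B", "A", False, ("b",), ("a",)),
    Possession("B", "A", False, ("rookie",), ("a",)),  # skipped: not in last season's fit
]
print(team_efficiencies(possessions, alpha=0.03, o_rating=o_rating, d_rating=d_rating))
```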

[table id=1 /]

This table shows that, on average, our predictions were off by 4.7 and 4.0 points for offensive efficiency, 3.6 and 5.0 points for defensive efficiency, and 6.0 and 7.2 points for net efficiency. These are in terms of efficiency ratings, and thus they are in terms of points per 100 possessions.

The most interesting error is associated with net efficiency. This is because net efficiency tells us if we predicted the team to have a positive or negative net efficiency rating. In other words, this tells us if we predicted a team to be above or below average.

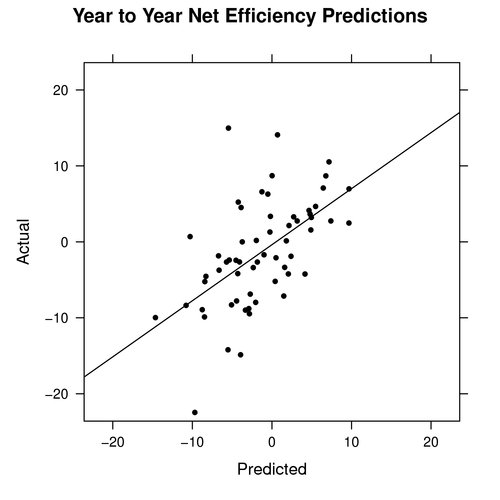

The graph below shows the predicted versus actual net efficiencies for the year to year predictions in 06-07 to 07-08 and 07-08 to 08-09:

The highest predicted net efficiency was for the 07-08 Boston Celtics. This model predicted their net efficiency rating to be 22.6, but their actual observed net efficiency rating was 10.5. The lowest predicted net efficiency was for the 08-09 Memphis Grizzlies. This model predicted their net efficiency rating to be -22.5, but their actual net efficiency rating was -10.0.

It is important to remember the model and player restrictions imposed when making these predictions, as it leads to some funky stuff. This can be a good thing, though, as it should help drive efforts to understand how to better model an individual’s impact on team efficiency.

As an example, the 08-09 Bulls were predicted to have a net efficiency of 15.0, while their actual net efficiency was -5.0. This prediction came from fewer than 900 possessions, as players not in the previous year’s model dominated court time. Looking at the individual lineup combinations used to construct this prediction may provide insight into why it was so high.

Summary

The results above help to show how a very basic model of individual players predicts team efficiency, and it should set a baseline for how well a new model or method should predict.

This model does nothing to account for how players change from season to season, such as gaining experience or declining physically, so that is one area for improvement. Another could come from constructing a model that deals with small sample sizes. We might also want to use more than a single season’s data when fitting this model.

There is much exploring to do, but I am most interested in how game state affects our efficiency expectations. Therefore, I plan on exploring more than simply home court advantage in future models. It is also important to make predictions for all players, including rookies and others not in the previous year’s model, so I plan on determining how we might best make these predictions.

My Talk at the 2009 Southeastern Ranking and Clustering Workshop

My attempts to record the talk failed due to what appears to be a broken Flip Mino. (Note to self: don’t upgrade the software on a working product the night before you plan on using it.)

All is not lost, as you can download the slides for my talk:

In addition, you can download the code and data used in the creation of the presentation by downloading:

- rw09.zip: View the README file for information on where everything is and what it does

Since some parts of the talk are better explained out loud than on slides, I’d be happy to answer any questions you have. So just leave a comment if you have a question!

What I’ve Learned Over the Past Year

One year ago today I published my first post, so I wanted to go through the past year and see what stuff sticks out, for better or worse. Regardless of the end result of a lot of my work, I know I sure have learned a lot along the way.

If you’ve been reading for some time, then I hope you’ve enjoyed the journey with me so far. If you’re new, then I hope you enjoy this tour through the past year.

- I started off on a kick of wanting to collect new data. New data is vital for further understanding, but without a good foundation to work with, new data is more noise than help. I realized this, which is why I haven’t been collecting much new data, as important as it is.

- Speaking of new data, I’ve been slowly making play-by-play data available in a CSV format so that it is easy to work with. The data files also provide data, like shot locations, that has been hard to get in the past.

- The rough outline of a theoretical model for the probability of winning a basketball game at the team, unit, and individual level gave me a lot to think about in terms of how a team comes together to score points.

- Trying to figure out substitution patterns is an interesting problem, and one step in this direction is looking at the average number of starters in the game based on time and by lead. The joint problem of time and lead is still left to be solved.

- In November I was inspired to put together some bridge jumper projections for the rest of the NBA season, and looking back I don’t really see how it’s very useful. It was fun to put together, but I don’t think I’ll be doing anything like this in the future.

- Figuring out how one unit of players will fare against another is important, and I took a theoretical and practical look at trying to assign efficiency ratings between two competing units.

- Adjusted plus/minus is something that took me a long time to fully wrap my head around, and my naive points added contribution helped me figure it out. My modeling skills have come a long way since then; at the time, working with grouped data and the like was not second nature to me, but now it is. I think we can throw points added in the trash, but it was an important step toward figuring out adjusted plus/minus.

- Using numerical methods to adjust statistics is controversial (in the NBA, at least), but these methods help in college sports, where there is a large disparity in levels of competition. I applied some of these methods to 3pt shooting, efficiency ratings, and rebounding rates to show how we might use them in the NBA. You don’t seem to gain much at the team level, but I think there is more to explore at the unit and player level.

- Basketball on Paper’s skill curves are an informative look at how a player’s efficiency relates to their role in the offense, and you too can create them yourself (even though the exact way Dean created the curves is still locked away in his vault).

- I would like to understand defense better (who wouldn’t?), and my post on the defensive four factors and shooting versus defensive efficiency were basic steps at that. This isn’t surprising, but shooting is the most important predictor of defensive efficiency.

- We want to know how players fit together and what their production would look like as a unit. I took a look at shot distributions and shooting percentages, but there is much more to do in this area.

- My post on referee efficiency ratings turned out to be one of the best learning experiences all year. Refs are always a touchy subject, and I didn’t provide evidence to make some of the statements I made. As one reader pointed out, referee stuff is “very hard to do right”. He’s right, and the discussion that followed based on this post was very informative. I feel that I’ve yet to acquire the tools needed to do a ref analysis real justice, so I’ve left it alone for now.

- Plays are the basic building blocks of basketball, and there is much to explore: how plays end, how a play is likely to end based on how it started, and how long it takes for plays to end are just a starting point.

- Multilevel and hierarchical models seem to me to be very applicable to the problems we deal with in analyzing sports data. We usually know something more about the players, units, or teams we analyze than simply the sample of data we observe. I’ve finally started to piece these types of models together and have applied them to a few NBA data sets: 3pt shooting statistics, offensive rebounding rates, and defensive fouls drawn and committed.

So that’s my best summary of the past year. It’s not a complete rundown of every post, so make sure you check the archives if you’re thirsty for more.

My Future Focus

While working on a presentation for an upcoming talk about sports ratings, I realized that I’ve yet to really try to predict anything. When we talk about why one model might be better than another, one aspect is its predictive capability.

Even though fitting data helps answer some questions, my focus for the immediate future will be on measuring predictive capability, as I want to get an idea of what models of past data tell us about the future. I have a lot of ideas that I hope to get to sooner rather than later. 🙂