The Time Distribution of Events in the NBA


In my quest to create a realistic simulation of the NBA, I’ve come to the point where I need to answer an important question: how long does it take for an event to occur after the start of a play?

We don’t actually need the time distribution of events to simulate, and make inferences about, most player-versus-player aspects of the game. That said, some important aspects of the game are tied directly to time. By modeling time, we will be better able to examine how the time-to-penalty situation impacts a team’s efficiency. Although my current focus is on fouls, other aspects of the game, like strategy, have timing implications too.

Estimating The Distributions

The data used to estimate these time-to-event distributions was extracted from the play-by-play data for the 06-07 through 08-09 regular seasons. Each observation is the number of seconds elapsed from the start of a play to the play-ending event, conditional on how the play started.
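Conditioning on how the play started amounts to splitting the elapsed times into one sample per play-starting event, and then estimating a separate distribution from each sample. A minimal sketch in Python; the tuple layout, the event labels, and the numbers are all made up for illustration and are not the actual format of the play-by-play data.

```python
from collections import defaultdict

# Hypothetical records: (play_start_event, play_end_event, seconds_elapsed).
# Labels and values are made up for illustration only.
plays = [
    ("timeout", "shot", 12),
    ("timeout", "shot", 18),
    ("def_rebound", "shot", 9),
    ("def_rebound", "turnover", 14),
    ("steal", "shot", 4),
]


def elapsed_by_start(plays, end_event):
    """Group elapsed seconds for one play-ending event by how the play started."""
    groups = defaultdict(list)
    for start, end, seconds in plays:
        if end == end_event:
            groups[start].append(seconds)
    return dict(groups)


shot_times = elapsed_by_start(plays, "shot")
print(shot_times)  # {'timeout': [12, 18], 'def_rebound': [9], 'steal': [4]}
```

Each list in the result is one conditional sample, so each play-starting event gets its own density estimate.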

Thanks to a tip from @revodavid, I used R’s density() function to perform kernel density estimation on the data. I’m certainly no expert with this stuff, but for some reason setting adjust to 0.5 (half the default bandwidth) produced results closer to what I was expecting. I don’t want to stray too far from the defaults, though, as the idea isn’t to follow every little bump in the data but to smooth it sensibly into a good approximation. This isn’t life-or-death stuff, so I figure it will be good enough for now.
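For readers without R handy, the sketch below mimics what density() does with its default settings: a Gaussian kernel whose bandwidth is Silverman’s rule of thumb (R’s bw.nrd0) multiplied by `adjust`. It is a Python approximation, not R’s implementation (R bins the data and evaluates the estimate with an FFT, while this sums the kernels directly), and the times are made up.

```python
import math
import statistics


def bw_nrd0(x):
    """Rule-of-thumb bandwidth modeled on R's bw.nrd0 (the density() default).

    0.9 * min(sd, IQR / 1.34) * n^(-1/5), with R's fallbacks when that minimum is 0.
    """
    sd = statistics.stdev(x)
    q1, _, q3 = statistics.quantiles(x, n=4, method="inclusive")  # R type 7 quantiles
    lo = min(sd, (q3 - q1) / 1.34)
    if lo == 0:
        lo = sd or abs(x[0]) or 1.0
    return 0.9 * lo * len(x) ** -0.2


def gaussian_kde(x, grid, adjust=1.0):
    """Evaluate a Gaussian kernel density estimate at each point in grid.

    As with R's density(), the bandwidth actually used is bw_nrd0(x) * adjust.
    """
    h = bw_nrd0(x) * adjust
    scale = 1.0 / (len(x) * h * math.sqrt(2.0 * math.pi))
    return [scale * sum(math.exp(-0.5 * ((g - xi) / h) ** 2) for xi in x) for g in grid]


# Made-up elapsed times (whole seconds), for illustration only.
times = [3, 5, 6, 8, 8, 9, 11, 12, 14, 15, 17, 20, 22, 23]
grid = [i * 0.5 for i in range(61)]  # 0.0 to 30.0 seconds
default_fit = gaussian_kde(times, grid)               # default bandwidth
half_bw_fit = gaussian_kde(times, grid, adjust=0.5)   # like adjust = 0.5 in R
```

Halving the bandwidth lets each kernel follow the data more closely, which is why the adjust = 0.5 curves show more of the bumps in the data than the default ones.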

One thing to point out is that the times aren’t perfectly measured. Time is continuous, yet (prior to the 2009 playoffs, at least) the play-by-play never records fractional seconds. The data collection is also inexact: some of the shot events below last longer than 24 seconds. Aside from outright errors in the time stamps of play-by-play events, the time of a shot event isn’t recorded at the moment the shot is taken, so we should expect some shots to show more than 24 seconds elapsed.
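A quick way to see how much of a sample is affected by these recording issues is to count the times that run past the shot clock and confirm that everything is stored in whole seconds. A minimal sketch in Python; the function name and the shot times are hypothetical, chosen for illustration.

```python
def shot_clock_overruns(times, limit=24):
    """Return the share of recorded times above `limit`, plus those times."""
    over = [t for t in times if t > limit]
    return len(over) / len(times), over


# Made-up elapsed seconds for shot events, for illustration only.
shot_times = [4, 11, 19, 23, 24, 25, 27]
share, over = shot_clock_overruns(shot_times)
print(f"{share:.1%} of shot times exceed 24 s: {over}")

# Whole-second resolution: every recorded time is an integer.
print(all(float(t).is_integer() for t in shot_times))
```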

Time to Shot Events

To illustrate how long events take to occur, I’ve decided to show the estimated probability distributions of the time to a shot event. These shot events include all 2pt and 3pt makes, misses, and shooting fouls drawn.

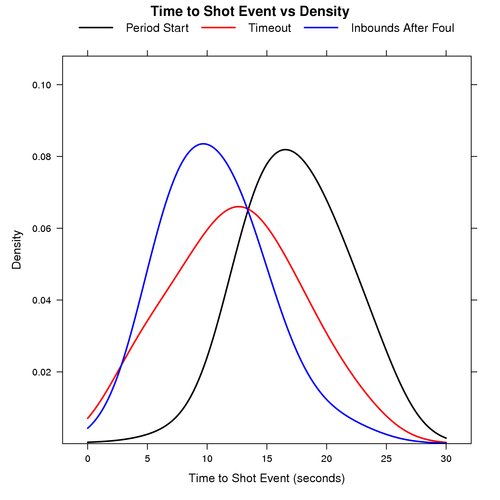

Period Start vs Timeout vs Inbounds After Foul

The graph below shows the probability distribution for the number of seconds that elapse before a shot event for plays that start at the beginning of a period, after a timeout, and on an inbounds after a foul.

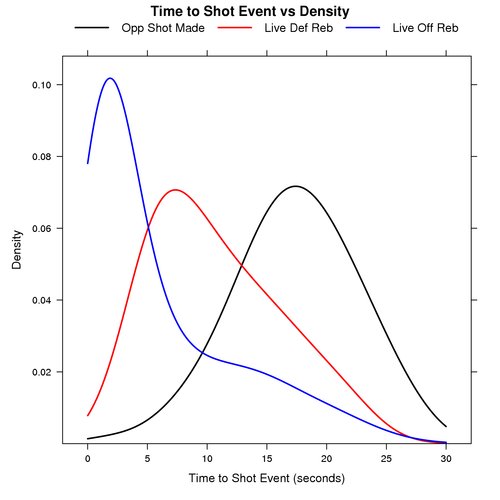

Opponent Shot Made vs Live Def Reb vs Live Off Reb

The graph below shows the probability distribution for the number of seconds that elapse before a shot event for plays that start after an opponent’s made shot, a live defensive rebound, and a live offensive rebound.

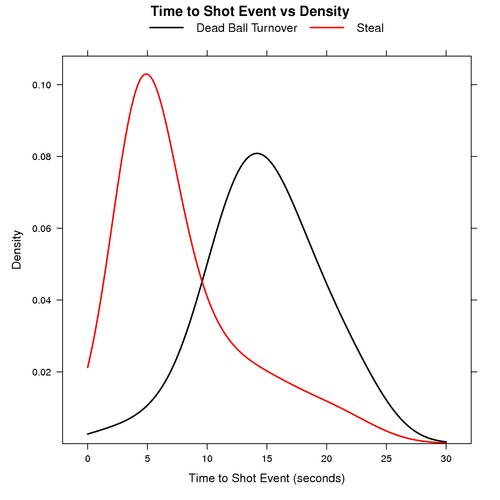

Dead Ball Turnover vs Steal

The graph below shows the probability distribution for the number of seconds that elapse before a shot event for plays that start after a dead-ball turnover and after a steal.

Explore These Distributions

The graphs above only illustrate the time-to-event distributions for shot events. Other events, like personal fouls and turnovers, warrant their own time-to-event distributions for simulation purposes.

You can use the following files to further examine these and other time to event distributions:

- times.R – This R script creates the graphs above and includes code for examining the distributions for personal fouls and turnovers.

- times.csv – This CSV data file contains the elapsed times extracted from the play-by-play from the 06-07 to 08-09 regular seasons.

The times.csv data file lists the play-starting and play-ending events, which you can then examine with times.R.
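If you’d rather explore times.csv outside of R, Python’s standard-library csv module is enough to pull out the elapsed times for one start/end combination. A minimal sketch; the column names `start`, `end`, and `seconds` and the event labels are assumptions for illustration, so check the actual header of times.csv and adjust them to match.

```python
import csv
import io


def load_times(fileobj, start_event, end_event):
    """Collect elapsed seconds for one (play start, play end) pair from CSV rows.

    Assumes hypothetical columns 'start', 'end', and 'seconds'.
    """
    reader = csv.DictReader(fileobj)
    return [
        float(row["seconds"])
        for row in reader
        if row["start"] == start_event and row["end"] == end_event
    ]


# In-memory stand-in for the real file, with made-up rows.
sample = io.StringIO("start,end,seconds\ntimeout,shot,12\ntimeout,foul,7\nsteal,shot,4\n")
print(load_times(sample, "timeout", "shot"))  # [12.0]
```

With the real file, open it with `open("times.csv", newline="")` and pass the file object to `load_times` in place of the in-memory sample.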

Summary

By estimating these distributions, we now have a general idea of how much time elapses before various NBA events. This provides a starting point for realistically simulating actual NBA periods rather than simply X number of possessions.
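One way to go from these distributions to simulating whole periods is to keep drawing play lengths until the 12-minute clock runs out. Drawing from a Gaussian kernel density estimate is simple: pick one observed time at random and add normal noise with the bandwidth as its standard deviation. A minimal sketch in Python, assuming a single pooled sample of made-up times and a hypothetical bandwidth of 3 seconds; a fuller simulation would choose the distribution according to how each play started and which event ends it.

```python
import random

PERIOD_SECONDS = 12 * 60  # length of an NBA regulation quarter


def sample_kde(times, bw, rng):
    """Draw one value from a Gaussian KDE built on `times` with bandwidth `bw`.

    Pick an observation at random, then add N(0, bw) noise. Non-positive draws
    are rejected and redrawn, a simple choice made for this sketch.
    """
    while True:
        draw = rng.choice(times) + rng.gauss(0.0, bw)
        if draw > 0:
            return draw


def simulate_period(times, bw, seed=None):
    """Chain sampled play lengths until the period clock runs out."""
    rng = random.Random(seed)
    clock = float(PERIOD_SECONDS)
    lengths = []
    while clock > 0:
        length = min(sample_kde(times, bw, rng), clock)  # last play is cut off at 0:00
        lengths.append(length)
        clock -= length
    return lengths


# Made-up elapsed times and bandwidth, for illustration only.
times = [3, 5, 6, 8, 8, 9, 11, 12, 14, 15, 17, 20, 22, 23]
plays = simulate_period(times, bw=3.0, seed=42)
print(len(plays), "plays in one simulated period")
```

Because the clock drives the loop, the number of plays per period comes out of the simulation instead of being fixed in advance.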

One question worth answering is how useful quick-shot or drain-the-clock strategies are. That opens up many related questions, such as: what field goal percentages and turnover rates can we realistically expect using these strategies? Hopefully this is a starting point for moving in that direction.

9 Comments on this post


Gabe said:

“The shot events below show events that last past 24 seconds. Aside from actual errors in the time stamp on each play-by-play event, the shot event time isn’t actually recorded when the shot is taken.”

I think part of the reason for this might be that the two clocks (game and shot) don’t start at the same time. One starts immediately after a shot is made, while the other starts when the ball is inbounded. I can never remember which is which though.

June 30th, 2009 at 8:51 am

Ryan said:

Yeah, after a made shot the game clock (outside of 2 minutes, I think) runs, while the 24-second clock doesn’t start until the ball is touched after the inbounds. I know late in games the game clock stops after a made shot, but I don’t know exactly when that happens.

June 30th, 2009 at 11:31 am

Ryan said:

Here’s what I found from the NBA’s website:

b. The timing devices shall be stopped:

(1) During the last minute of the first, second and third periods following a successful field goal attempt.

(2) During the last two minutes of regulation play and/or overtime(s) following a successful field goal attempt.

June 30th, 2009 at 11:36 am

Mark said:

Neat stuff. I’m not an expert on kernel density estimation either, but the book cited in the R help file explaining the default rule-of-thumb bandwidth basically says it’s pretty good for a wide range of models, but also that ‘If the purpose of density estimation is to explore the data … choose the smoothing parameter subjectively…’ Undersmoothing (using a fairly low bandwidth) is also described as preferable when trying to display data for others.

From this, I think you should feel comfortable modifying the bandwidth, especially if you’re unsure whether there’s a specific ‘standard’ underlying probability distribution. The rule of thumb is described as being good for t-distributions, log-normal distributions with skewness up to about 1.8, and normal mixtures with separations up to three standard deviations. I have no clue whether your data would fall under these categories.

Anyway, your graphs seem to do a good job of showing the (I think very interesting) phenomena, so, whatever works.

June 30th, 2009 at 3:18 pm
