2008-09 Offensive Statistical Scouting Reports

Late last year I started putting together some statistical scouting reports for lineups in an attempt to understand how combinations of players worked together. Nothing great came of that, but the general idea of putting together a statistical scouting report for individual players has stuck with me.

The idea of the report is to give a quick sense of what to expect from a player given the team, system, and role they currently play in. These reports won’t tell me which players are the best at a given skill, but they will tell me how players are performing in their given situations.

With that in mind, I’d like to present a first look at offensive scouting reports from the 2008-09 season. For each player you will find a variety of offensive measures that will help give you an idea as to what players do on the court.

With the exception of offensive ratings, all statistics were estimated with multilevel models (similar to this one, for example), where players are grouped by their designated position. The point of fitting these models is to provide realistic estimates of the statistics and also realistic estimates of the uncertainty in those estimates. Therefore, for each statistic you will find an estimate and a range, where the estimate is the estimated mean and the range is a 95% confidence interval.
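To illustrate why grouping players gives more realistic estimates, here is a minimal sketch of partial pooling. It is not the multilevel model behind these reports. It swaps in a simpler beta-binomial (empirical Bayes) shrinkage, and the position mean and prior weight are hypothetical inputs standing in for what a fitted model would estimate.

```python
import math


def pooled_estimate(makes, attempts, prior_mean, prior_weight):
    """Shrink a player's raw success rate toward a position-level mean.

    prior_mean and prior_weight are hypothetical stand-ins for quantities a
    multilevel model would estimate for the player's position group.
    """
    # Beta prior centered on the position mean, worth `prior_weight` attempts
    alpha = prior_mean * prior_weight + makes
    beta = (1 - prior_mean) * prior_weight + (attempts - makes)
    mean = alpha / (alpha + beta)
    # Normal approximation to the Beta posterior gives a rough 95% range
    var = alpha * beta / ((alpha + beta) ** 2 * (alpha + beta + 1))
    half = 1.96 * math.sqrt(var)
    return mean, (max(0.0, mean - half), min(1.0, mean + half))


# A player who went 10-for-10 at a position that (hypothetically) makes 45%
est, rng = pooled_estimate(10, 10, prior_mean=0.45, prior_weight=100)
print(f"estimate {est:.3f}, range ({rng[0]:.3f}, {rng[1]:.3f})")
```

The 10-for-10 player is pulled from 100% down to 50%. Ten attempts carry much less weight than the position-level prior, and the range shows how much uncertainty remains.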

I’ve tried to make everything as self-explanatory as possible, but let’s look at LeBron James’ statistical scouting report as an example:

- Offensive Rating: From Basketball on Paper, this is the number of points LeBron produces per hundred possessions he uses while on the court.

- Usage: The proportion of possessions LeBron uses while on the court.

- Own Shots Assisted On: Shots LeBron makes that a teammate assisted on.

- Teammate’s Shots Assisted On: Shots a teammate makes that LeBron assisted on.
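The first two definitions reduce to simple ratios. Below is a minimal sketch, assuming you already have a player's points produced, possessions used, and the team possessions played while he was on the court. The totals are hypothetical and are not LeBron's actual numbers.

```python
def offensive_rating(points_produced, possessions_used):
    """Points produced per 100 possessions the player used."""
    return 100.0 * points_produced / possessions_used


def usage(possessions_used, team_possessions_on_court):
    """Share of team possessions (while the player is on the court) that he used."""
    return possessions_used / team_possessions_on_court


# Hypothetical season totals
print(offensive_rating(1265.0, 1100.0))
print(usage(1100.0, 3400.0))
```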

I hope everything else explains itself, but if not, feel free to leave a comment. Speaking of comments, I’d like to hear your thoughts on the good and bad of the reports. Clearly defensive information is a must, but what offensive information am I missing (or is perhaps unnecessary)?

Also, there are some weird things in the data (like Adonal Foyle showing up with the Memphis Grizzlies). I appreciate any help in finding other similar cases of weirdness.

Lastly, the ultimate goal is to translate these numbers into words, such as “this player is a good shooter” or “this player is a bad passer”. What sort of statements would you like to see made about players (good rebounder, average shot blocker, etc.)?

With the work now done for the 2008-09 season, I hope to have the 2009-10 reports up soon. Until then, let me know what you think!

My Poster at Cha-Cha Days 2009

This weekend is Cha-Cha Days 2009 at the University of Central Florida, and I will be presenting a poster on some research I’ve done for my senior research and writing project at the College of Charleston.

The title for my poster is Modeling Basketball’s Points per Possession, and the motivation for this work comes from wanting to figure out how to best model the number of points teams score or allow on a possession. The first step in accomplishing this goal is to find a modeling technique that could have plausibly generated the actual data observed, and the poster presents the results of this process.

In the poster below you will find graphs that illustrate the difference between the actual frequency of points scored on a possession and the simulated frequency of points scored on a possession, where the simulated data come from the fitted models. These graphs cover the various models examined: linear, Poisson, negative binomial, zero-altered Poisson (ZAP), and multinomial logistic regressions.
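One standard way to quantify the gap between actual and model-implied frequencies is a chi-square goodness-of-fit statistic. Below is a minimal sketch using hypothetical counts of possessions worth 0, 1, 2, and 3 points and hypothetical model probabilities. The chi-square tail probability is computed in closed form for integer degrees of freedom, so only the standard library is needed. For simplicity, the degrees of freedom ignore any parameters estimated from the same data.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df."""
    y = x / 2.0
    if df % 2 == 0:
        # Q(k, y) = exp(-y) * sum_{i<k} y^i / i!  with k = df / 2
        term = total = math.exp(-y)
        for i in range(1, df // 2):
            term *= y / i
            total += term
        return total
    # Odd df: start from Q(1/2, y) = erfc(sqrt(y)) and step up by one
    total, a = math.erfc(math.sqrt(y)), 0.5
    for _ in range((df - 1) // 2):
        total += y ** a * math.exp(-y) / math.gamma(a + 1)
        a += 1
    return total


def gof_test(observed, model_probs):
    """Chi-square goodness of fit of observed counts to model probabilities."""
    n = sum(observed)
    expected = [n * p for p in model_probs]
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    df = len(observed) - 1
    return stat, chi2_sf(stat, df)


# Hypothetical counts of 0-, 1-, 2- and 3-point possessions vs. a model's probabilities
stat, p = gof_test([60, 5, 30, 5], [0.5, 0.1, 0.3, 0.1])
print(f"chi-square = {stat:.2f}, p = {p:.3f}")
```

A small p-value means the model is unlikely to have generated the observed frequencies.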

Using a chi-square goodness-of-fit test, the multinomial logistic regression is the only model that could plausibly have generated the data we actually observed. An example of the fit of this model is shown on the right-hand side of the poster, which shows the model fit for the 2008-09 Orlando Magic offense. This model was fit with the following predictors:

- Reserves: The number of opponent reserve players in the game. This value ranges from zero to five.

- Penalty: An indicator taking the value one when the defensive team is in the penalty and zero when not.

- Players: Indicators for each player that take the value of one when the player is on the court and zero when not.

The p-values shown in the table of coefficients were calculated using a likelihood ratio test, and they allow us to test if these predictors are useful.
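A likelihood ratio test compares two nested models. Below is a minimal sketch, assuming you already have the maximized log-likelihoods of the model with and without a given predictor; the numbers are hypothetical. One detail matters here. In a multinomial logit, a single predictor adds one coefficient for each non-reference outcome. With outcomes of 0, 1, 2, and 3 points (an assumption about the outcome categories), testing one predictor therefore uses 3 degrees of freedom.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df."""
    y = x / 2.0
    if df % 2 == 0:
        term = total = math.exp(-y)
        for i in range(1, df // 2):
            term *= y / i
            total += term
        return total
    total, a = math.erfc(math.sqrt(y)), 0.5
    for _ in range((df - 1) // 2):
        total += y ** a * math.exp(-y) / math.gamma(a + 1)
        a += 1
    return total


def likelihood_ratio_test(loglik_reduced, loglik_full, extra_params):
    """Test whether the extra coefficients in the full model are useful."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    return stat, chi2_sf(stat, extra_params)


# Hypothetical log-likelihoods without and with the Penalty indicator
stat, p = likelihood_ratio_test(-5012.4, -5006.1, extra_params=3)
print(f"LR statistic = {stat:.1f}, p = {p:.4f}")
```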

Using this fit for the Orlando Magic, the remaining graphs illustrate the estimated difference between Dwight Howard and his backup Marcin Gortat, as well as the estimated difference between being in the penalty and not being in the penalty.

Please comment if you have any questions or feedback relating to the research and/or poster!

A direct link to the poster can be found here.

Individual Defensive Efficiency Ratings Extracted from Play-by-Play Data

In my last post I presented individual offensive efficiency ratings that were extracted from play-by-play data. In this post I will present individual defensive efficiency ratings that I have extracted from play-by-play data.

As with the individual offensive efficiency ratings, I’ve constructed these individual defensive efficiency ratings following the approach Dean Oliver uses in Basketball on Paper.

Calculating Individual Defensive Efficiency Rating

The purpose of the individual defensive efficiency rating is to estimate an individual’s impact on the number of points their team allows per hundred possessions the individual is on the court. As Dean explains in Basketball on Paper, there is a lot of defensive data left to collect that would allow us to better understand defense numerically.

Thanks to the play-by-play, a small fraction of this data is available to us so that we do not have to approximate it from the box score (such as the number of free throws players allow by way of fouls). We still, however, do not have data for key elements of defense, such as:

- Number of field goals defenders force to be missed or allow to be made

- Number of turnovers defenders force the opponent to commit

Even if we had this data, there is a case to be made that coaching affects individual defensive efficiency ratings. We can’t overlook this, even though we don’t yet have a way to estimate this coaching effect quantitatively with the data available to us.

That said, here is a list of the things defenders do that impact defensive efficiency:

- Allowing shots to be made

- Preventing shots from being made (like blocking shots)

- Forcing turnovers (like stealing passes and taking charges)

- Grabbing defensive rebounds

- Fouling opponents (fouls that lead to free throws)

These events lead to the opponent scoring zero or more points, and credit is assigned as follows:

Assigning Credit: Made Shots

Because we don’t have information pertaining to which defender(s) contested the shot, all defenders receive 20% credit for allowing a field goal to be made.

Assigning Credit: Free Throws

Defenders that commit fouls that lead to made free throws are assigned full credit for allowing the opponent to score these points.

Assigning Credit: Turnovers

When the opponent commits a turnover, we currently have three ways of assigning credit. First, when there is a steal, the defender credited with the steal receives full credit for forcing the turnover. Second, when there is an offensive foul turnover, the defender credited with drawing the offensive foul receives full credit for forcing the turnover. Lastly, all defenders receive 20% credit when we do not have explicit defender information associated with a turnover.
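The credit rules for made shots, free throws, and turnovers can be sketched as small functions. Each function returns a mapping from defender to their share of the event. The defender labels are hypothetical, and the sketch assumes the play-by-play already tells us who stole the ball, who drew the offensive foul, or who committed the shooting foul.

```python
def made_shot_credit(defenders):
    """No contest data: every defender on the floor gets an equal share (20% of five)."""
    return {d: 1.0 / len(defenders) for d in defenders}


def free_throw_credit(fouler):
    """The defender whose foul led to the made free throws gets full credit."""
    return {fouler: 1.0}


def turnover_credit(defenders, stealer=None, foul_drawer=None):
    """Steals and drawn offensive fouls earn full credit; otherwise split evenly."""
    if stealer is not None:
        return {stealer: 1.0}
    if foul_drawer is not None:
        return {foul_drawer: 1.0}
    return {d: 1.0 / len(defenders) for d in defenders}


lineup = ["PG", "SG", "SF", "PF", "C"]  # hypothetical defender labels
print(made_shot_credit(lineup))
print(turnover_credit(lineup, stealer="SG"))
```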

Assigning Credit: Defensive Rebounds

On defensive rebounds, the player forcing the shot to be missed receives credit proportional to:

[latex]FMweight=\displaystyle\frac{(DFG\%)(1-DOR\%)}{(DFG\%)(1-DOR\%) + (1-DFG\%)(DOR\%)}[/latex],

where DFG% is the opponent’s field goal percentage against the defense (the probability the opponent makes a shot, so a higher value means misses are harder to force), and DOR% is the opponent’s offensive rebounding percentage (the defensive team’s probability of allowing an offensive rebound). As Dean discusses in Appendix 3 of Basketball on Paper, this formula weighs the relative difficulty of forcing the opponent to miss a shot against that of obtaining a defensive rebound.

When there is a block, we give the player that blocks the shot credit equal to [latex]FMweight[/latex]. The player that rebounds the shot is then assigned credit equal to [latex]1-FMweight[/latex].

If there is no block, then the credit for forcing the missed shot is distributed evenly among the five defenders. This means each defender gets credit equal to [latex]\frac{1}{5}(FMweight)[/latex].
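The block and no-block rules can be sketched as follows. The sketch reads DFG% as the opponent’s field goal percentage, following Dean’s box-score version of the formula. The input rates and defender labels are hypothetical, and team defensive rebounds are not handled here.

```python
from collections import defaultdict


def fm_weight(dfg, dor):
    """Weight on forcing the miss relative to securing the defensive rebound.

    dfg: opponent field goal percentage; dor: opponent offensive rebounding percentage.
    """
    num = dfg * (1 - dor)
    return num / (num + (1 - dfg) * dor)


def defensive_rebound_credit(defenders, rebounder, dfg, dor, blocker=None):
    """Split one stop between forcing the miss and grabbing the rebound."""
    w = fm_weight(dfg, dor)
    credit = defaultdict(float)
    if blocker is not None:
        credit[blocker] += w  # the shot blocker forced the miss
    else:
        for d in defenders:  # no block: all five share the forced miss
            credit[d] += w / len(defenders)
    credit[rebounder] += 1 - w  # the rebounder finishes the stop
    return dict(credit)


lineup = ["PG", "SG", "SF", "PF", "C"]  # hypothetical defender labels
print(defensive_rebound_credit(lineup, "PF", dfg=0.45, dor=0.27, blocker="C"))
print(defensive_rebound_credit(lineup, "PF", dfg=0.45, dor=0.27))
```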

Similar rules are applied when there is a team defensive rebound with and without a block.

The Defensive Ratings

Below is a list of the players that have the top 15 defensive ratings from the 2008-2009 regular season (minimum 500 defensive possessions used):

[table id=6 /]

The following spreadsheet lists the defensive ratings for each player from the 2008-2009 regular season (including other applicable statistics):

08-09 Basketball on Paper Defensive Ratings from Play-by-Play

The data is grouped and sorted by teams and players, and it contains the following data:

- Drtg: the player’s defensive efficiency rating

- Std Err: the standard error of the rating

- 95% CI: a 95% confidence interval for the rating

- Usg%: the percentage of possessions used by this player while on the court

- Total Used: the total number of possessions this player used

- %Shots: percentage of possessions used that were shots

- %Fouls: percentage of possessions used that were fouls

- %Drebs: percentage of possessions used that were defensive rebounds

- %Turnovers: percentage of possessions used that were turnovers

Not a Perfect Measure of Defense

I believe these ratings give us a slightly better look at defense than what we can glean from the box score, but they aren’t perfect either. There is still a lot of data we’re not incorporating, specifically which defenders are contesting which shots (other than shots that are blocked). Even if we had this data, there would still be issues with splitting credit between teammates. For example, a player not contesting a given shot could be responsible for allowing the shot to happen and should share some credit with the contesting player.

With these difficulties in mind, my hope is to construct similar defensive ratings using counterpart information to try to figure out which players are responsible for the opponents’ shots. With this data we could then adjust both offensive and defensive ratings for the level of competition on both sides of the ball.

Individual Offensive Efficiency Ratings Extracted from Play-by-Play Data

I’m unsatisfied with the usefulness of individual efficiency ratings that estimate a player’s offensive and defensive impact on a lineup’s efficiency simply by controlling for the strength of teammates and opponents. These ratings don’t give any real insight into what the individual players are actually doing, because they aren’t parameterized in terms of the things players actually do on the court.

Therefore, I’m going to explore the methods of calculating individual ratings that Dean Oliver outlines in Basketball on Paper. The only difference between what Dean has done and what I will do is that I will use play-by-play data instead of box score data.

Dean’s Offensive Efficiency Rating

Before extracting individual offensive efficiency ratings from the play-by-play data, I had to first figure out how to translate Dean’s formulas that estimate ratings from box score data into something I could apply to individual possessions in the play-by-play data. I learned a lot during this process, so I think it is worthwhile to outline the general idea of the formulas here.

Figuring out how many possessions a player is responsible for and how many points the player produces go hand in hand. Once you know what share of a possession a player is responsible for, you can multiply that share by the number of points scored to estimate the points the player produced. Note: I actually calculate these in terms of scoring sequences within each possession. This handles the rare case where a made field goal plus a shooting foul leads to a missed free throw that the offense rebounds and converts into more points. These sequences are normalized so that they add up to exactly one team possession.
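The share-times-points step, including the normalization to one team possession, can be sketched as follows. The raw shares below are hypothetical. They represent credit collected across two scoring sequences within one possession that together sum to more than one.

```python
def points_produced(shares, points):
    """Normalize players' raw shares of a possession to sum to one, then split the points."""
    total = sum(shares.values())
    normalized = {player: s / total for player, s in shares.items()}
    produced = {player: s * points for player, s in normalized.items()}
    return normalized, produced


# Hypothetical raw shares from two scoring sequences in one 3-point possession
used, produced = points_produced({"A": 0.75, "B": 0.75, "C": 0.5}, points=3)
print(used)      # shares of the one team possession
print(produced)  # points credited to each player
```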

A player uses a possession (and produces zero or more points) in one of the following ways:

- Making a field goal or free throw

- Assisting on a field goal

- Obtaining an offensive rebound that leads to a made field goal or free throw

- Missed field goals or free throws that are rebounded by the defense

- Turnovers

Clearly a player produces zero points when they miss a field goal, miss a free throw, or commit a turnover. When a player makes an unassisted field goal or free throw they receive full credit for using the team possession and producing the number of points scored.

When there is an assist made on a field goal, the player making the field goal receives the following portion of credit:

[latex]1 - \frac{1}{2}(eFG\%)[/latex],

where eFG% is the effective FG% of the shot attempt. The player assisting on the shot receives the following portion of credit:

[latex]\frac{1}{2}(eFG\%)[/latex]

Dean’s theory behind these formulas is that easier shots are harder to assist on. Thus if a player assists on an easy shot, they should get more credit than a player assisting on a harder shot.
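The split for an assisted field goal is a one-liner. The sketch compares an easy and a hard shot. The eFG% values are hypothetical stand-ins for a shot-location model’s expectations.

```python
def assisted_fg_credit(efg, points):
    """Split an assisted field goal between scorer and passer.

    efg: expected eFG% of the shot; returns (share of possession, points credited).
    """
    passer = 0.5 * efg
    scorer = 1.0 - passer
    return {"scorer": (scorer, scorer * points), "passer": (passer, passer * points)}


easy = assisted_fg_credit(0.60, 2)  # hypothetical easy shot near the rim
hard = assisted_fg_credit(0.40, 2)  # hypothetical contested long two
print(easy)
print(hard)
```

The passer gets 30% of the possession on the easy shot but only 20% on the hard one, which matches the reasoning above.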

The last thing we have to take care of is offensive rebounds. I’ll leave you to Appendix 1 of Basketball on Paper for the full theory, but the idea is that when an offensive rebound leads to points we want to give credit to the offensive rebounder that is proportional to how important the offensive rebound is to the team. The formula is:

[latex]\displaystyle\frac{(1-TeamOR\%)(TeamPlay\%)}{(1-TeamOR\%)(TeamPlay\%) + (TeamOR\%)(1-TeamPlay\%)}[/latex],

where TeamOR% is the team’s probability of obtaining an offensive rebound, and TeamPlay% is the team’s probability of scoring at least one point on a play.
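The offensive rebound weight can be computed directly from the formula above. The rates below are hypothetical. The weight rises when offensive rebounds are harder to come by, which is the intuition behind giving the rebounder more credit.

```python
def oreb_weight(team_or, team_play):
    """Credit weight for an offensive rebound that leads to points."""
    num = (1 - team_or) * team_play
    return num / (num + team_or * (1 - team_play))


print(oreb_weight(0.30, 0.45))  # hypothetical TeamOR% and TeamPlay%
```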

With these formulas we can now give credit to the players when examining each individual possession in the play-by-play data. Credit is given out as each possession is encountered in the play-by-play, so we don’t care about any of the other stuff in Dean’s box score formulas.

Estimating eFG%, TeamOR%, and TeamPlay%

One thing that we do care about is estimating effective FG%, TeamOR%, and TeamPlay%.

Because the play-by-play allows us to obtain details such as the location of shots players assist on, it is important to come up with a reasonable expectation on the eFG% for these shots so that we give credit appropriately. To do this, I fit multilevel models by position for every player for the following shot locations:

- Low Paint Shots: Shots inside the paint <= 6 feet from the hoop

- Short 2pt Shots: Shots <= ~14 feet from the hoop

- Long 2pt Shots: All other 2pt shots

- Corner 3pt Shots: Corner 3pt shots

- Other 3pt Shots: All non-corner 3pt shots

These models give us reasonable expectations on the eFG% of shots and should give better insight into the expected eFG% of all shots that are taken.
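Before fitting these models, each shot has to be assigned to one of the five location groups. Below is a minimal sketch of that assignment. The input fields (distance in feet and flags for paint, three-pointer, and corner) are assumptions about what a parsed play-by-play row provides, and the 14-foot cutoff is approximate, as noted above.

```python
def shot_zone(distance_ft, in_paint, is_three, is_corner):
    """Assign a shot to one of the five location groups used for the eFG% models."""
    if is_three:
        return "Corner 3pt" if is_corner else "Other 3pt"
    if in_paint and distance_ft <= 6:
        return "Low Paint"
    if distance_ft <= 14:  # approximate cutoff
        return "Short 2pt"
    return "Long 2pt"


print(shot_zone(3, in_paint=True, is_three=False, is_corner=False))
print(shot_zone(22, in_paint=False, is_three=True, is_corner=True))
```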

To estimate TeamOR% and TeamPlay%, I fit two logistic regressions using the actual team-versus-team data. This lets me estimate TeamOR% and TeamPlay% for any pair of opposing teams, so that a fair offensive rebounding weight can be calculated for each game.
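Below is a sketch of how a fitted logistic regression turns into a matchup-specific probability. It assumes a simple additive form, with an intercept plus an offense effect for the rebounding team and a defense effect for its opponent. That form and all of the coefficients are hypothetical, not the fitted values. TeamPlay% would follow the same pattern with its own coefficients.

```python
import math


def inv_logit(x):
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical coefficients (not fitted values)
INTERCEPT = -0.85
OFFENSE = {"Team A": 0.10, "Team B": 0.05}
DEFENSE = {"Team A": -0.12, "Team B": -0.08}


def team_or_pct(offense, defense):
    """Predicted offensive rebound probability for `offense` playing against `defense`."""
    return inv_logit(INTERCEPT + OFFENSE[offense] + DEFENSE[defense])


print(team_or_pct("Team A", "Team B"))
```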

The Offensive Ratings

The following spreadsheet lists the offensive ratings for each player from the 2008-2009 regular season (including other applicable statistics):

08-09 Basketball on Paper Offensive Ratings from Play-by-Play

The data is grouped and sorted by teams and players, and it contains the following data:

- Ortg: the player’s offensive efficiency rating

- Usg%: the percentage of possessions used by this player while on the court

- Total Used: the total number of possessions this player used

- %Shots: percentage of possessions used that were shots

- %Free Throws: percentage of possessions used that were free throws

- %Assists: percentage of possessions used that were assists

- Assist eFG%: mean expected eFG% of assists

- %Oreb: percentage of possessions used that were offensive rebounds

- %Turnover: percentage of possessions used that were turnovers

Usage versus Efficiency

(If usage versus efficiency means nothing to you, it’s best you get acquainted with the topic by reading this post by Eli Witus.)

It would be really nice to find a way to model an individual’s usage versus efficiency as Dean outlines in Basketball on Paper, but it isn’t very easy to do. In Eli’s post linked to above, Eli illustrates a nifty way of estimating the effect of individual player usage on a lineup’s efficiency. Eli used data from roughly half of the 2007-2008 season, so I wanted to know what results we get when using the data in the spreadsheet above with data from most of the games from the 2008-2009 season.

See Eli’s post for all of the details, but essentially the response is actual efficiency minus expected efficiency, and the predictor is the sum of the players’ usg% values minus 1. For example, a lineup with an actual efficiency of 110, an expected efficiency of 100, and a total usg% of 1.05 would have 10 as the response and 0.05 as the predictor. The idea is that if usage has an effect on efficiency, then we expect to find a positive coefficient for our predictor.
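Building the response and predictor from lineup data is mechanical. Below is a minimal sketch with an ordinary least squares fit. The unweighted fit with an intercept is an assumption made for illustration, so see Eli’s post for the actual specification. The example lineup uses hypothetical usg% values that sum to the 1.05 in the example above.

```python
def usage_regression_data(lineups):
    """lineups: (actual_eff, expected_eff, [usg% of the five players]) tuples."""
    xs = [sum(usgs) - 1.0 for _, _, usgs in lineups]
    ys = [actual - expected for actual, expected, _ in lineups]
    return xs, ys


def ols(xs, ys):
    """Least-squares slope and intercept for y = a + b * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


xs, ys = usage_regression_data([(110, 100, [0.25, 0.22, 0.20, 0.20, 0.18])])
print(xs, ys)
```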

Fitting a model to this data set estimates this coefficient to be 10.9 with a standard error of 2.9 giving us a 95% confidence interval of (5.2, 16.6) and a p-value < 0.01 when testing if this coefficient is zero. Eli’s estimate was approximately 25 with a standard error of around 9 with a 95% confidence interval of (7.4, 42.6).

My estimate suggests that each 0.01 increase in total usg% increases our efficiency expectation by about 0.11 points per hundred possessions. This estimated effect is smaller than Eli’s. His suggests a 0.04 difference in total usg% changes expectations by one point per hundred possessions, whereas mine suggests we would need a 0.09 difference to see the same effect. The 95% confidence interval for the usg% difference needed to expect a one point per hundred possession change in efficiency is (0.06, 0.19).
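The numbers in the last two paragraphs follow from the coefficient and its standard error. The usg% difference needed for a one-point change is 1/coefficient. Because that transformation is monotone and both interval endpoints are positive, inverting the endpoints of the coefficient’s interval gives an interval for it.

```python
coef, se = 10.9, 2.9

lo, hi = coef - 1.96 * se, coef + 1.96 * se  # 95% CI for the coefficient
per_hundredth = 0.01 * coef                  # effect of a 0.01 usg% increase
usg_for_one_point = 1 / coef                 # usg% change for +1 pt/100 poss
usg_interval = (1 / hi, 1 / lo)              # inverted CI endpoints
eli_usg_for_one_point = 1 / 25

print(round(lo, 1), round(hi, 1), round(per_hundredth, 2))
print(round(usg_for_one_point, 2), tuple(round(v, 2) for v in usg_interval))
```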

The overall point is that I too find this effect, so hooray for reproducible research!

The Next Step

The next step is to extract individual defensive efficiency ratings from the play-by-play. Once I have defensive ratings, the goal is to adjust the ratings for strength of teammates and opponents. I then plan to examine how these ratings predict future team efficiency ratings, similar to how I examined the predictive capabilities of basic efficiency models.

Is One Lineup Better Than Another?

One part of Wayne Winston’s new book Mathletics that I didn’t really like was the way he compared raw lineup data to determine if one lineup is better than another. After thinking about it more, I think the real reason I don’t like his method is because he compares the lineups’ net points per minute (pts/min).

I’m a big proponent of points per possession (pts/poss), so I wanted to look at how we could compare lineups using raw pts/poss data. I think it is important to put emphasis on raw, as we’re not trying to control for strength of opponent, home court advantage, etc. Although these things have a real impact on the way our data is generated, the goal is to maintain simplicity while still being useful, just like the method Wayne proposes in his book.

Calculating the Difference Between Two Lineups’ Net Pts/Poss

As long as you have the data, comparing the difference between two lineups’ mean net pts/poss isn’t hard (I’m using hard here relatively, of course; for my mom, this would be really hard). That said, it helps to have something that makes the calculations easy. Therefore, I have created an R function compare_lineups() that you can find in compare_lineups.R.

This function takes four arguments:

- l1.o: vector of points scored on each offensive possession by lineup #1

- l1.d: vector of points allowed on each defensive possession by lineup #1

- l2.o: vector of points scored on each offensive possession by lineup #2

- l2.d: vector of points allowed on each defensive possession by lineup #2

Using this data, the compare_lineups() function calculates and reports the following:

- The mean and standard error for the difference in the lineups’ mean net pts/poss

- A 95% confidence interval for this difference

- The z-score of the difference and estimated probability lineup #1’s mean net pts/poss is greater than lineup #2’s mean net pts/poss

This function also returns these statistics in the form of a list. This allows you to do cool stuff like graph the plausible values for the difference between each lineup’s mean net pts/poss.
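compare_lineups.R itself isn’t reproduced here. The following is a hypothetical Python re-creation of the same kind of comparison. It assumes a normal approximation in which the four possession samples are independent and their sample variances (n - 1 denominator) add. Run on the Mathletics lineup data used later in this post, it matches the R output shown there to the printed precision.

```python
import math
from statistics import mean, variance


def compare_lineups(l1_o, l1_d, l2_o, l2_d):
    """Hypothetical analog of compare_lineups(); results are per 100 possessions."""
    diff = (mean(l1_o) - mean(l1_d)) - (mean(l2_o) - mean(l2_d))
    # Independent samples: the variances of the four means add
    se = math.sqrt(sum(variance(v) / len(v) for v in (l1_o, l1_d, l2_o, l2_d)))
    z = diff / se
    prob = 0.5 * (1 + math.erf(z / math.sqrt(2)))  # normal CDF: Pr(L1 > L2)
    return {"diff": 100 * diff, "se": 100 * se,
            "ci": (100 * (diff - 1.96 * se), 100 * (diff + 1.96 * se)),
            "z": z, "prob": prob}


def possessions(zeros, ones, twos, threes):
    """Expand counts of 0-, 1-, 2- and 3-point possessions into a list."""
    return [0] * zeros + [1] * ones + [2] * twos + [3] * threes


res = compare_lineups(possessions(39 + 45, 2 + 5, 29 + 37, 2 + 5),
                      possessions(52 + 42, 3 + 2, 31 + 26, 6 + 1),
                      possessions(87 + 30, 15 + 4, 66 + 23, 9 + 2),
                      possessions(29 + 80, 3 + 4, 18 + 70, 9 + 18))
print(res)
```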

Application to the Example From Mathletics

To illustrate his method, Wayne gives an example of how he compares lineups on page 225 of Mathletics. In his example, he compares two Cavs lineups from the 2006-2007 season. The end result of his method is that we estimate there to be a >99% chance that the superior lineup has a higher mean net pts/48 minutes than the inferior lineup.

With compare_lineups(), we can now compare the lineups’ mean net pts/poss. To do this, you’ll first need to load the code in compare_lineups.R. Once loaded, you can run the following command to compare the lineups:

res <- compare_lineups(c(rep(0,39+45),rep(1,2+5),rep(2,29+37),rep(3,2+5)), c(rep(0,52+42),rep(1,3+2),rep(2,31+26),rep(3,6+1)), c(rep(0,87+30),rep(1,15+4),rep(2,66+23),rep(3,9+2)), c(rep(0,29+80),rep(1,3+4),rep(2,18+70),rep(3,9+18)))

Running this code will produce the following output:

For Lineup 1 - Lineup 2:
--> Mean: 28.5
--> Std Err: 15.3
--> 95% CI: (-1.5, 58.5)
--> Z-score: 1.86
--> Pr(L1 > L2): 0.9686

This output shows us that the estimated difference between the lineups’ mean net pts/poss is 28.5 pts/100 poss with a standard error of 15.3 pts/100 poss. A 95% confidence interval for the mean difference is (-1.5, 58.5) pts/100 poss, which means we have 95% confidence that the true mean difference is somewhere in this interval.

We’re specifically interested in the probability that the mean net pts/poss of lineup #1 is greater than the mean net pts/poss of lineup #2 (aka a one-tailed test, for the inner stat nerd deep inside of you), so the z-score of 1.86 allows us to estimate that this probability is 0.97. In other words, at the 5% level we conclude that lineup #1’s mean net pts/poss is significantly greater than lineup #2’s mean net pts/poss, even though the two-sided 95% confidence interval above narrowly includes zero.
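Whether the difference counts as significant depends on the tail of the test. Both p-values can be computed from the same z-score. The one-tailed p-value is below 0.05, while the two-tailed p-value is above it, which is why the two-sided 95% interval still contains zero.

```python
import math


def normal_cdf(z):
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


z = 1.86
one_tailed = 1 - normal_cdf(z)        # alternative: lineup #1 is better
two_tailed = 2 * (1 - normal_cdf(z))  # alternative: the lineups differ either way
print(round(one_tailed, 4), round(two_tailed, 4))
```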

We can also see this visually with a graph. In R,

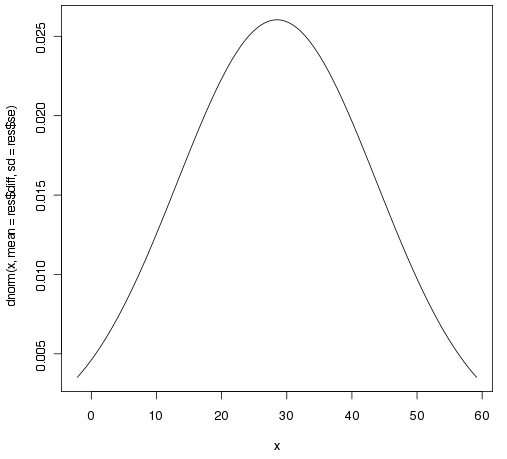

curve(dnorm(x,mean=res$diff,sd=res$se),from=res$diff-2*res$se,to=res$diff+2*res$se)

will generate the following graph of the difference in the lineups’ mean net pts/100 poss:

In this case we arrive at the same conclusion that lineup #1 is better than lineup #2. Depending on the question you’re trying to answer, like determining which lineup you should play in a given situation, I think this really just provides us with a good starting point. It is important to try to understand why this is showing up in the data, as we want to ensure that, for example, the lineup with the better data isn’t showing this advantage simply because it played inferior opponents.

Summary

My hope is that this code makes it easier to compare lineups on a per-possession basis, although you still have to extract the data yourself to use it. This is still just a starting point for comparing lineups, but it does give us evidence that tells us where to dig deeper to understand what makes one lineup better than another in a given situation.